Current public AI chatbots “do not produce convincing analysis or reasoning” and could create data protection issues, judicial guidance warns


The Courts and Tribunals Judiciary has warned judges that artificial intelligence tools can produce inaccurate legal information and their use could lead to data protection issues, in a new guidance document published this week (12 December).

Providing guidance for responsible use of AI in courts and tribunals, the document calls on judges to be aware of the technology's capabilities and limitations.

The document recommends that AI tools be used as a way of obtaining non-definitive confirmation of something, rather than as a source of immediately correct facts.

It also notes that AI chatbots appear to have been trained on material published on the internet, and therefore, their 'view' of the law "is often based heavily on US law although some do purport to be able to distinguish between that and English law".

The guidance explains that AI chatbots, such as ChatGPT, Google Bard or Bing, generate new text using an algorithm based on the prompts they receive and the data they have been trained on.

"This means the output which AI chatbots generate is what the model predicts to be the most likely combination of words (based on the documents and data that it holds as source information) [and] is not necessarily the most accurate answer," the document says.

As the tools are trained on a dataset, information generated by AI will also "inevitably" reflect errors and biases in its training data, it says.

The guidance says that the accuracy of any information provided to judges by an AI tool must be checked before it is used or relied upon. “Information provided by AI tools may be inaccurate, incomplete, misleading or out of date. Even if it purports to represent English law, it may not do so.”

AI tools may also “make up fictitious cases, citations or quotes or refer to legislation that does not exist”, “provide incorrect or misleading information regarding the law or how it might apply”, and “make factual errors”.

The guidance meanwhile urges judges not to enter any information that is not already in the public domain into a public AI chatbot as this essentially publishes the information "to all the world".

It says: "The current publicly available AI chatbots remember every question that you ask them, as well as any other information you put into them. That information is then available to be used to respond to queries from other users. As a result, anything you type into it could become publicly known."

On legal representatives using AI tools in their work, the guidance notes: "All legal representatives are responsible for the material they put before the court/tribunal and have a professional obligation to ensure it is accurate and appropriate.

"Provided AI is used responsibly, there is no reason why a legal representative ought to refer to its use, but this is dependent upon context."

However, it recommends that judges remind lawyers of their obligations and confirm that they have independently verified the accuracy of any research or case citations generated with the assistance of an AI chatbot.

The guidance also acknowledges that AI chatbots are now being used by unrepresented litigants, who “rarely have the skills independently to verify legal information provided by AI chatbots and may not be aware that they are prone to error”.

The document says that in these cases, judges should ask the litigant what checks for accuracy have been undertaken.

AI tools are now being used to produce fake material, including text, images and video, the guidance also warns. It urges judges to be aware of this new possibility and potential challenges posed by 'deepfake technology'.

The document does note areas in which AI can be "potentially useful", including summarising large bodies of text, writing presentations, and administrative tasks like composing emails and memoranda.

Adam Carey

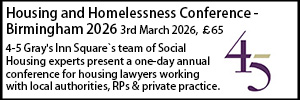

18-03-2026 1:00 pm

22-04-2026 11:00 am

01-07-2026 11:00 am