Goldilocks and the three problems: issues with the most common tender evaluation method

Hector Denfield examines the most common method of evaluating tenders, sets out three problems with it, and offers a workaround that goes some way to avoiding them.

Imagine you are running a procurement with a 50%/50% weighting for price/quality, and have received three tenders. You open them up and evaluate the quality of the bids first, awarding marks up to a maximum possible score of 100. Then you look at the prices. The results look like this:

Scenario 1

| Company | Quality | Price |
|---|---|---|
| A | 50 | £50 |
| B | 75 | £75 |
| C | 100 | £100 |

On the face of it, these three bids are evenly matched:

• Company A has a cheap and cheerful offering – the quality is not very high but their price is low.

• Company B has a middle-of-the-road offering (a “Goldilocks bid”).

• Company C has a very high quality offering with a price to match.

Which tender would you choose, if you had a free choice? Which tender do you think should win? Which tender do you think will win?

Three problems with the most common formula for scoring price

This is the most common formula we see in practice for scoring price (F1):

$$\text{price score} = \frac{\text{lowest price}}{\text{tender price}} \times \text{price weighting}$$

Here is a quick run through of three problems with this F1 formula.

Problem 1: the warping effect of exceptionally low tenders

The first problem with F1 is demonstrated nicely in Michael Bowsher QC’s article “Random Effects of Scoring Price in a Tender Evaluation”. It shows the inconsistency in results produced by different lowest priced bids. These “outlier bids” have a disrupting effect on the outcome of the procurement, and they are outside of the control of the contracting authority.

You can take this warping effect to its natural conclusion and look at the impact of zero-priced “loss leaders” in tenders with multiple elements in the price matrix. In Scenario 2 below we have asked tenderers to submit prices for widgets and dongles, and we are awarding 25% for each element. We have told tenderers that we expect to order the same quantity of widgets and dongles over the lifetime of the contract, hence the equal weighting.

Scenario 2

| Company | Price | Price calculation | Price score | Total score |
|---|---|---|---|---|
| A | Widgets: £0; Dongles: £20 | Widgets: 0/0 x 25%; Dongles: 10/20 x 25% | Widgets: 25%; Dongles: 12.5% | 37.5% |
| B | Widgets: £10; Dongles: £10 | Widgets: 0/10 x 25%; Dongles: 10/10 x 25% | Widgets: 0%; Dongles: 25% | 25% |
| C | Widgets: £100; Dongles: £10 | Widgets: 0/100 x 25%; Dongles: 10/10 x 25% | Widgets: 0%; Dongles: 25% | 25% |

Company A has priced their widgets as a loss leader and will be making all their profit through the sale of dongles. Company B has split their prices evenly. Company C is objectively worse than Company B – its dongles cost the same but its widgets are grossly overpriced.

We have had to be creative when dividing zero by itself. In pure mathematical terms the result of this calculation is undefined, but the intention of the formula is to give the lowest priced bidder maximum marks, so we have awarded Company A maximum marks for widgets.

There are two perverse results here:

• Company A’s pricing and Company B’s pricing will probably lead to the same overall cost over the lifetime of the contract (because we anticipate ordering the same volume of widgets and dongles), but Company A has won the procurement by a country mile.

• Company B and Company C have both scored the same, despite Company B’s offer being undeniably better. This is because zero divided by anything is zero – behold the curious and pernicious effect of a tenderer introducing the number zero into the proceedings!
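The Scenario 2 arithmetic can be reproduced with a short Python sketch. The function name `f1_element_score` and the data layout are illustrative assumptions, and the zero-price branch simply encodes the convention adopted above (full marks for a zero-priced lowest bid) rather than any mathematically defined result. It also assumes prices are never negative.

```python
def f1_element_score(price, lowest_price, weighting):
    """F1 for one price element: (lowest price / tender price) x weighting."""
    if price == 0:
        # 0/0 is undefined. Assumption: follow the article's convention and
        # give a zero-priced bid (necessarily the lowest) full marks.
        return weighting
    return lowest_price / price * weighting


# Scenario 2 prices in pounds; each element carries a 25% weighting.
bids = {
    "A": {"widgets": 0, "dongles": 20},
    "B": {"widgets": 10, "dongles": 10},
    "C": {"widgets": 100, "dongles": 10},
}
ELEMENT_WEIGHTING = 25

# Lowest price submitted for each element.
lowest = {
    item: min(prices[item] for prices in bids.values())
    for item in ("widgets", "dongles")
}

# Total price score per company: sum of the per-element F1 scores.
price_scores = {
    name: sum(
        f1_element_score(prices[item], lowest[item], ELEMENT_WEIGHTING)
        for item in lowest
    )
    for name, prices in bids.items()
}

# Cost of one widget plus one dongle, reflecting the equal volumes expected.
cost_per_pair = {name: sum(prices.values()) for name, prices in bids.items()}

for name in bids:
    print(f"{name}: price score {price_scores[name]:.1f}%, "
          f"cost per pair £{cost_per_pair[name]}")
```

Comparing `price_scores` with `cost_per_pair` makes both perverse results visible side by side: A and B cost the same per pair but score very differently, while B and C score the same despite very different costs.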

Problem 2: the prejudicial effect on “Goldilocks bids”

A Goldilocks bid is one of medium quality and medium price, like Company B’s bid in Scenario 1 above. It is briefly demonstrated in Michael Bowsher QC’s article but it is worth focussing on this Goldilocks prejudice in more detail as it may be seriously undermining the effectiveness of your procurement processes.

Let’s score the three bids received in Scenario 1:

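| Company | Quality calculation | Quality score | Price calculation | Price score | Total score |
|---|---|---|---|---|---|
| A | 50/100 x 50% | 25% | 50/50 x 50% | 50% | 75% |
| B | 75/100 x 50% | 37.5% | 50/75 x 50% | 33.3% | 70.8% |
| C | 100/100 x 50% | 50% | 50/100 x 50% | 25% | 75% |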

The result is probably not what most people would have expected. The cheap-and-cheerful bid and the pricey-yet-good bid both score 75%. The Goldilocks bid is 4.2% behind both of them.
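The Scenario 1 totals quoted above can be checked with a minimal Python sketch. The helper names `f1_price_score` and `quality_score` are assumptions made for illustration; the F1 calculation is taken to be (lowest price / tender price) x price weighting, as in the worked figures in this article.

```python
def f1_price_score(price, lowest_price, weighting):
    """F1: (lowest price / tender price) x price weighting."""
    return lowest_price / price * weighting


def quality_score(marks, max_marks, weighting):
    """Quality marks as a share of the maximum, scaled by the quality weighting."""
    return marks / max_marks * weighting


# Scenario 1: (quality marks out of 100, price in pounds), 50%/50% weighting.
bids = {"A": (50, 50), "B": (75, 75), "C": (100, 100)}
lowest = min(price for _, price in bids.values())

totals = {
    name: quality_score(marks, 100, 50) + f1_price_score(price, lowest, 50)
    for name, (marks, price) in bids.items()
}

for name, total in totals.items():
    print(f"{name}: {total:.1f}%")
```

The gap between B and the other two bids comes entirely from the price side: B's quality score sits exactly halfway between A's and C's, but its F1 price score does not.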

The practical implications of this are potentially enormous. As a local authority if you are setting up your procurements in this way you are favouring bids that are either low quality and low price or high quality and high price. The former is possibly not sustainable and will end up costing more in the long run if the quality is so low that the contract needs to be terminated early, whereas the latter may end up blowing budgets that are already stretched to breaking point. The Goldilocks bid, the one that is “just right”, is being squeezed out of the running.

Problem 3: the unintuitive effect of mixing absolute and relative difference formulas

The third problem with the F1 formula is the impact it has when paired with the standard approach to marking the quality element of a bid. By far the most common practice we see our clients adopting is to use the F1 formula to score price and a five-point scale for scoring quality. This is a mismatch of mathematical types – the F1 formula operates on relative difference whereas the five-point scale operates on absolute difference. This is further discussed below, but for now I simply want to demonstrate that this can create some really odd results.

Consider this very basic example:

- Price weighting: 45%

- Quality weighting: 55%

- Maximum possible marks for quality: 80

Scenario 3

| Company | Price | Price calculation | Price score | Quality marks | Quality calculation | Quality score | Total score |
|---|---|---|---|---|---|---|---|
| A | 100 | 100/100 x 45% | 45% | 20 | 20/80 x 55% | 13.8% | 58.8% |
| B | 190 | 100/190 x 45% | 23.7% | 50 | 50/80 x 55% | 34.4% | 58.1% |

In my opinion, this procurement has gone badly wrong. The contracting authority sent a clear signal to the market with the higher weighting for quality (55%) over price (45%). Company B understood this, and submitted a bid that is more than double the quality for less than double the price of Company A. If you saw “double the quality, less than double the price!” written on a neon sign in a supermarket you would think it was a great deal. But in this procurement, the contracting authority would be bound to award the contract to Company A owing to the way the evaluation methodology has panned out.

What’s going on?

Why is this happening? What is causing the Goldilocks prejudice, the loss leader problem, and the unintuitive outcomes? In short, it is because the price is scored using a relative difference formula, and the quality is scored using an absolute difference formula.

The remainder of this article will address the distinction between “relative difference” and “absolute difference”, then offer a simple solution.

Relative vs absolute

An important concept when comparing two numbers is the distinction between the relative difference between the two (expressed as a percentage) and the absolute difference between the two (expressed as a plain number).

Consider Scenario 4:

| Company | Price |
|---|---|
| A | 10 |
| B | 20 |

The relative price difference between A and B is 100%, ie B is 100% greater than A.

The absolute price difference between A and B is 10, ie B is 10 more than A.

Now consider Scenario 5:

| Company | Price |
|---|---|
| A | 1,510 |
| B | 1,520 |

Here the relative difference between A and B is 0.66%, ie B is 0.66% greater than A.

The absolute difference between A and B is 10, ie B is 10 more than A.

In both scenarios the absolute difference is the same but the relative difference is quite clearly not.

Apply F1 to Scenario 4 above (assume a price weighting of 50%):

| Company | Price | Calculation | Score |
|---|---|---|---|
| A | 10 | 10/10 x 50% | 50% |
| B | 20 | 10/20 x 50% | 25% |

Now apply F1 to Scenario 5, again with a price weighting of 50%:

| Company | Price | Calculation | Score |
|---|---|---|---|
| A | 1510 | 1510/1510 x 50% | 50% |
| B | 1520 | 1510/1520 x 50% | 49.67% |

Even though Company B costs 10 more in both scenarios, its score is very different. This is because the F1 formula operates on the relative difference, not the absolute difference.
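As a rough illustration of the distinction, the following Python sketch computes both kinds of difference for Scenarios 4 and 5 and then Company B's F1 score in each. The function names are assumptions chosen for readability, and the relative difference is measured against the lower price, as in the examples above.

```python
def absolute_difference(lower, higher):
    """Plain numerical gap between two prices."""
    return higher - lower


def relative_difference(lower, higher):
    """Gap expressed as a percentage of the lower price."""
    return (higher - lower) / lower * 100


def f1_price_score(price, lowest_price, weighting):
    """F1: (lowest price / tender price) x price weighting."""
    return lowest_price / price * weighting


# (Company A price, Company B price) for each scenario.
scenarios = {"Scenario 4": (10, 20), "Scenario 5": (1510, 1520)}

results = {}
for label, (price_a, price_b) in scenarios.items():
    results[label] = {
        "absolute": absolute_difference(price_a, price_b),
        "relative": relative_difference(price_a, price_b),
        # Company A is the lowest bidder in both scenarios.
        "f1_score_b": f1_price_score(price_b, price_a, 50),
    }
    print(label, results[label])
```

The absolute gap is identical in both scenarios, yet Company B's F1 score moves with the relative gap only.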

Alternative formulas

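This “distance from zero” formula can mitigate the Goldilocks prejudice and the loss leader problem (F2):

$$\text{price score} = \text{price weighting} - \left(\text{tender price} \times \frac{\text{price weighting}}{\text{highest price}}\right)$$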

This deliberately extreme example shows how F1 and F2 can produce very different results:

Scenario 6

| Company | Price | F1 calculation | F1 score | F2 calculation | F2 score |
|---|---|---|---|---|---|
| A | 0 | 0/0 x 50 | 50% | 50 - (0 x (50/50,000)) | 50% |
| B | 10 | 0/10 x 50 | 0% | 50 - (10 x (50/50,000)) | 49.99% |
| C | 50,000 | 0/50,000 x 50 | 0% | 50 - (50,000 x (50/50,000)) | 0% |

You can see that F2 combats the loss leader problem.
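A minimal Python sketch of the Scenario 6 comparison follows. The function names are illustrative assumptions; F2 is implemented as price weighting minus (tender price x (price weighting / highest price)), matching the calculations in the table, and the F1 branch for a zero price encodes the full-marks convention used earlier in this article. F2 as written needs a highest price greater than zero.

```python
def f1_price_score(price, lowest_price, weighting):
    """F1: (lowest price / tender price) x price weighting."""
    if price == 0:
        # Assumption: the article's convention for 0/0, full marks.
        return weighting
    return lowest_price / price * weighting


def f2_price_score(price, highest_price, weighting):
    """F2: weighting - (tender price x (weighting / highest price))."""
    return weighting - price * weighting / highest_price


# Scenario 6 prices, with a 50% price weighting.
prices = {"A": 0, "B": 10, "C": 50_000}
lowest, highest = min(prices.values()), max(prices.values())

f1_scores = {name: f1_price_score(p, lowest, 50) for name, p in prices.items()}
f2_scores = {name: f2_price_score(p, highest, 50) for name, p in prices.items()}

for name in prices:
    print(f"{name}: F1 {f1_scores[name]:.2f}%, F2 {f2_scores[name]:.2f}%")
```

Under F1 a single zero price collapses every other bidder's score to zero, whereas under F2 Company B's score sits just below Company A's, in line with the small difference in their prices.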

Revisit the pricing from Scenario 1 above (an example I borrowed from Michael Bowsher QC’s article) and see again the different results produced by F1 and F2:

| Company | Price | F1 calculation | F1 score | F2 calculation | F2 score |
|---|---|---|---|---|---|
| A | 50 | 50/50 x 100% | 100% | 100 - (50 x (100/100)) | 50% |
| B | 75 | 50/75 x 100% | 67% | 100 - (75 x (100/100)) | 25% |
| C | 100 | 50/100 x 100% | 50% | 100 - (100 x (100/100)) | 0% |

Under both F1 and F2 Company A and Company C are 50% apart. However, Company B fares differently – under F1 it scores 33% less than Company A whereas under F2 it scores only 25% less. F2 seems fairer to me: Company B’s score is right in the middle of the three scores, which seems reasonable given that its price is also right in the middle. This shows that F2 combats the Goldilocks prejudice.

F2 is still susceptible to problems if the highest price is an outlier bid, in the same way that F1 is susceptible to problems if the lowest price is an outlier bid.

Consider this fairly typical situation (price weighting of 50%):

Scenario 7A

| Company | Price | F2 calculation | F2 score |
|---|---|---|---|
| A | 330 | 50 - (330 x (50/410)) | 9.8% |
| B | 400 | 50 - (400 x (50/410)) | 1.2% |
| C | 250 | 50 - (250 x (50/410)) | 19.5% |
| D | 290 | 50 - (290 x (50/410)) | 14.6% |
| E | 410 | 50 - (410 x (50/410)) | 0% |

So far so good: no loss leader problems, no Goldilocks prejudice. Now look at the chaos that unfolds when Company F submits an outlier bid:

Scenario 7B

| Company | Price | F2 calculation | F2 score |
|---|---|---|---|
| A | 330 | 50 - (330 x (50/5000)) | 46.7% |
| B | 400 | 50 - (400 x (50/5000)) | 46% |
| C | 250 | 50 - (250 x (50/5000)) | 47.5% |
| D | 290 | 50 - (290 x (50/5000)) | 47.1% |
| E | 410 | 50 - (410 x (50/5000)) | 45.9% |
| F | 5000 | 50 - (5000 x (50/5000)) | 0% |

Now suddenly the scores for A, B, C, D and E are extremely close, rendering this part of the evaluation process pretty redundant. F’s bid has ruined everything! The F2 formula is clearly not infallible.
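The compression caused by the outlier can be quantified with a short Python sketch. The helper `spread` is a hypothetical measure introduced here for illustration (best score minus worst score among a chosen group of bidders); it is not part of any of the formulas discussed.

```python
def f2_price_score(price, highest_price, weighting):
    """F2: weighting - (tender price x (weighting / highest price))."""
    return weighting - price * weighting / highest_price


def f2_scores(prices, weighting=50):
    """Score every bid against the highest price actually received."""
    highest = max(prices.values())
    return {name: f2_price_score(p, highest, weighting) for name, p in prices.items()}


def spread(scores, names):
    """Gap between the best and worst score among the named bidders."""
    selected = [scores[name] for name in names]
    return max(selected) - min(selected)


scenario_7a = {"A": 330, "B": 400, "C": 250, "D": 290, "E": 410}
scenario_7b = {**scenario_7a, "F": 5000}  # same bids plus the outlier

core_bidders = list(scenario_7a)  # A to E
spread_7a = spread(f2_scores(scenario_7a), core_bidders)
spread_7b = spread(f2_scores(scenario_7b), core_bidders)

print(f"Spread among A-E without F: {spread_7a:.1f} points")
print(f"Spread among A-E with F:    {spread_7b:.1f} points")
```

The prices of A to E are unchanged between the two runs; only the denominator supplied by the highest bid differs, which is what squeezes their scores together.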

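You can put a stop to these shenanigans by setting a maximum value for the price. The formula then looks like this (F3):

$$\text{price score} = \text{price weighting} - \left(\text{tender price} \times \frac{\text{price weighting}}{\text{max price}}\right)$$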

Any tender with a price greater than the “max price” scores zero. All other tenders are scored according to F3. Revisit Scenario 7B, but this time apply the F3 formula with a max price of 600 (an arbitrary but sensible figure):

Scenario 7C

| Company | Price | F3 calculation | F3 score |
|---|---|---|---|
| A | 330 | 50 - (330 x (50/600)) | 22.5% |
| B | 400 | 50 - (400 x (50/600)) | 16.7% |
| C | 250 | 50 - (250 x (50/600)) | 29.2% |
| D | 290 | 50 - (290 x (50/600)) | 25.8% |
| E | 410 | 50 - (410 x (50/600)) | 15.8% |
| F | 5000 | Price exceeds the max price of 600 | 0% |

This gives a better result than F2. I can see three potential issues with F3:

• It relies on the contracting authority picking an appropriate max price, because if it chooses a max price that is wildly out of sync with the prices that are received then it will cause the same chaos as an outlier bid. If the contracting authority has no reasonable idea what the max price should be, for example for innovative goods or services that are not commercially available, then it should not try to guess.

• Setting a max price will cause bidders to fall victim to “anchoring”, a common cognitive bias. The result will be higher prices submitted by bidders.

• You can reverse engineer the formula to ascribe a financial value to each percent scored. In the above example, a bidder will score 50% for a price of £0, and 0% for a price of £600, so it follows that each £1 is worth 0.083%. This can then be compared to the quality element to determine a precise cost/value trade-off before the procurement even begins. Under F1 and F2 you have to wait until all the tenders have been received before the price/value trade-off becomes apparent. Some contracting authorities may not have a problem with this, but others may find it politically difficult to be the ones putting a price on sensitive public services, rather than leaving it up to the vagaries of the market and claiming it is out of their hands.

To get around issues 2 and 3 you could define the max price by applying a formula to the prices received. I would suggest “median price x 2”. Any tender that is more than double the median tender is probably an outlier and should be awarded zero marks.
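The sketch below, in Python, shows F3 under both approaches: a max price fixed in advance (600, as in Scenario 7C) and a max price derived from the bids as "median price x 2". The function names and the `multiplier` parameter are assumptions made for illustration, and the median-based figures are simply what the suggested rule would give on the Scenario 7B prices, not results stated in the article.

```python
from statistics import median


def f3_price_score(price, max_price, weighting):
    """F3: as F2, but measured against a max price; dearer bids score zero."""
    if price > max_price:
        return 0.0
    return weighting - price * weighting / max_price


def median_based_max_price(prices, multiplier=2):
    """Suggested rule: max price = median tender price x 2."""
    return median(prices) * multiplier


prices = {"A": 330, "B": 400, "C": 250, "D": 290, "E": 410, "F": 5000}

# Scenario 7C: max price fixed in advance at 600.
fixed_scores = {name: f3_price_score(p, 600, 50) for name, p in prices.items()}

# With a fixed max price, each pound costs this many percentage points.
points_per_pound = 50 / 600

# Alternative: derive the max price from the bids actually received.
derived_max = median_based_max_price(prices.values())
derived_scores = {
    name: f3_price_score(p, derived_max, 50) for name, p in prices.items()
}

print(f"Fixed max 600: {fixed_scores}")
print(f"Points per pound: {points_per_pound:.3f}")
print(f"Derived max {derived_max}: {derived_scores}")
```

One design consequence worth noting: with the derived max price the value of each pound is no longer known before tenders arrive, which is precisely how it sidesteps issues 2 and 3.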

Solution

The way to avoid these problems is to use the F3 formula, and optionally set the max price at double the median price. If this is applied to Scenario 3, you get something sensible (where there is an even number of prices, the median is the mean of the two middle values, so in this case the median is 145 and the max price is 290):

| Company | Price | Price calculation | Price score | Quality marks | Quality calculation | Quality score | Total score |
|---|---|---|---|---|---|---|---|
| A | 100 | 45 - (100 x (45/290)) | 29.5% | 20 | (20/80) x 55% | 13.8% | 43.2% |
| B | 190 | 45 - (190 x (45/290)) | 15.5% | 50 | (50/80) x 55% | 34.4% | 49.9% |
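To see the change of outcome directly, this Python sketch scores Scenario 3 under both F1 and F3, with the F3 max price set at double the median. All function and variable names are assumptions for illustration; the weightings (45% price, 55% quality) and the maximum of 80 quality marks come from Scenario 3.

```python
from statistics import median


def f1_price_score(price, lowest_price, weighting):
    """F1: (lowest price / tender price) x price weighting."""
    return lowest_price / price * weighting


def f3_price_score(price, max_price, weighting):
    """F3: weighting - (tender price x (weighting / max price)), floor of zero."""
    if price > max_price:
        return 0.0
    return weighting - price * weighting / max_price


def quality_score(marks, max_marks, weighting):
    """Quality marks as a share of the maximum, scaled by the quality weighting."""
    return marks / max_marks * weighting


# Scenario 3: (price, quality marks out of 80).
bids = {"A": (100, 20), "B": (190, 50)}
PRICE_WEIGHTING, QUALITY_WEIGHTING, MAX_MARKS = 45, 55, 80

prices = [price for price, _ in bids.values()]
lowest = min(prices)
max_price = median(prices) * 2  # median 145, so max price 290

f1_totals = {
    name: f1_price_score(price, lowest, PRICE_WEIGHTING)
    + quality_score(marks, MAX_MARKS, QUALITY_WEIGHTING)
    for name, (price, marks) in bids.items()
}
f3_totals = {
    name: f3_price_score(price, max_price, PRICE_WEIGHTING)
    + quality_score(marks, MAX_MARKS, QUALITY_WEIGHTING)
    for name, (price, marks) in bids.items()
}

f1_winner = max(f1_totals, key=f1_totals.get)
f3_winner = max(f3_totals, key=f3_totals.get)
print(f"F1 winner: {f1_winner}, F3 winner: {f3_winner}")
```

The quality scores are identical in both runs, so the change of winner is attributable solely to how the price is scored.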

Conclusion

The F3 formula avoids some of the unfortunate outcomes that can result from the use of the F1 formula, as well as scoring Goldilocks bids much more fairly. This is likely to be an attractive prospect for public organisations in the austerity climate, who are looking for the bid that is “just right”.

One problem that remains throughout all of the formulas discussed in this article is that they all score prices by comparison to the other prices received. It could be argued that this is a violation of the principle of transparency that all procurements must comply with. While a tenderer may be able to reasonably ascertain the score they will receive for the quality element by comparing their product against the contracting authority’s requirements and scoring scale, there is little to no way for the tenderer to ascertain in advance what score they will get for their price. This is because their price score will be determined entirely by what bids are received from other tenderers, which they cannot know in advance. This issue is dealt with in part two of this article.

Hector Denfield is an Associate at Sharpe Pritchard. He advises public sector clients on the procurement process, as well as advising on a variety of issues arising from works and services contracts.

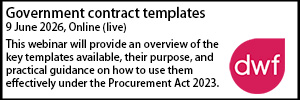

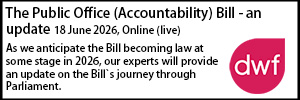

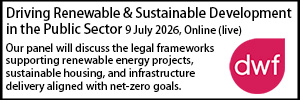

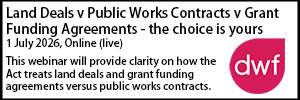
