No evidence benefits claimants subjected to harms or financial detriment from use of algorithms by local authorities: Information Commissioner

The Information Commissioner’s Office has not found any evidence to suggest that benefits claimants are subjected to any harms or financial detriment as a result of the use of algorithms or similar technologies in the welfare and social care sector, it has emerged.

The ICO launched an inquiry after concerns were raised about the use of algorithms in decision-making around benefit entitlement and in the welfare system more broadly.

In a blog this week, Stephen Bonner, Deputy Commissioner – Regulatory Supervision at the ICO, said the purpose of the inquiry was to understand the development, purpose and functions of algorithms and similar systems being used by local authorities. “We wanted to make sure people could feel confident in how their data was being handled.”

As part of this inquiry, the watchdog consulted with a range of technical suppliers, a representative sample of local authorities across the country and the Department for Work and Pensions. In total, 11 local authorities were selected through a risk assessment process. The aim was a sample that was representative by geographical location and that included the authorities with the largest benefits workloads.

Bonner said the inquiry’s findings would be fed into the ICO's wider work in this area.

Confirming that no evidence of harms or financial detriment had been found, Bonner said: “It is our understanding that there is meaningful human involvement before any final decision is made on benefit entitlement. Many of the providers we spoke with confirmed that the processing is not carried out using AI or machine learning but with what they describe as a simple algorithm to reduce administrative workload, rather than making any decisions of consequence.”

He added that it was not the role of the ICO to endorse or ban a technology. However, as the use of AI in everyday life increases, the ICO has an opportunity to ensure that it does not expand without due regard for data protection, fairness and the rights of individuals.

“While we did not find evidence of discrimination or unlawful usage in this case, we understand that these concerns exist,” Bonner said.

He suggested a number of practical steps that local authorities and central government can take when using algorithms or AI. These steps are intended to ease concerns about the fairness of these technologies and to help authorities remain compliant with data protection legislation.

- Take a data protection by design and default approach: “As a data controller, local authorities are responsible for ensuring that their processing complies with the UK GDPR. That means having a clear understanding of what personal data is being held and why it is needed, how long it is kept for, and [erasing] it when it is no longer required. Data processed using algorithms, data analytics or similar systems should be reactively and proactively reviewed to ensure it is accurate and up to date. This includes any processing carried out by an organisation or company on their behalf. If a local authority decides to engage a third party to process personal data using algorithms, data analytics or AI, they are responsible for assessing that [the third party is] competent to process personal data in line with the UK GDPR.”

- Be transparent with people about how they are using their data: “Local authorities should regularly review their privacy policies, and identify areas for improvement. There are some types of information that organisations must always provide, while the provision of other types of information depends on the particular circumstances of the organisation, and how and why people’s personal data is used. They should also bring any new uses of an individual’s personal data to their attention.”

- Identify the potential risks to people’s privacy: “Local authorities should consider conducting a Data Protection Impact Assessment (DPIA) to help identify and minimise the data protection risks of using algorithms, AI or data analytics. A DPIA should consider compliance risks, but also broader risks to the rights and freedoms of people, including the potential for any significant social or economic disadvantage. Our DPIA checklist can help when carrying out this screening exercise.”

Bonner said: “The potential benefits of AI are plain to see. It can streamline processes, reduce costs, improve services and increase staff power. Yet the economic and societal benefits of these innovations are only possible by maintaining the trust of the public. It is important that where local authorities use AI, it is employed in a way that is fair, in accordance with the law, and repays the trust that the public put in them when they hand their data over.

“We will continue to work with and support the public sector to ensure that the use of AI is lawful, and that a fair balance is struck between their own purposes and the interests and rights of the public.”

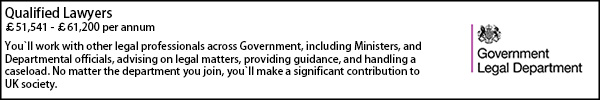

Legal Director - Government and Public Sector

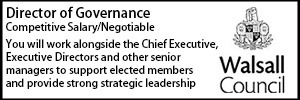

Head of Legal

Poll